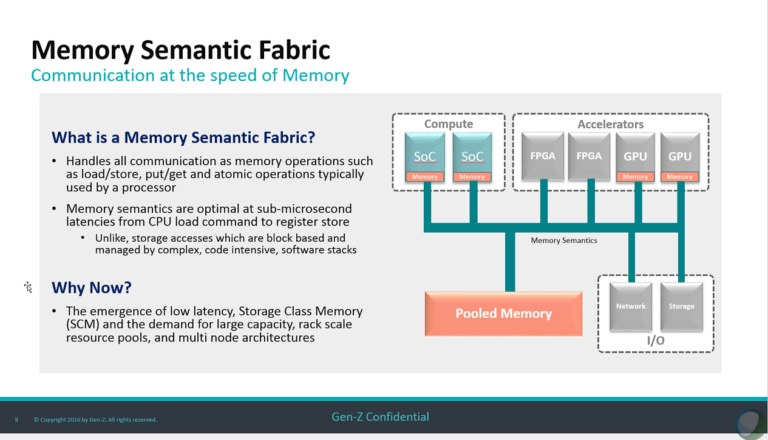

Industry powerhouses have joined forces to address an issue that has confounded system architects since the advent of multicore computing, one that has gained in urgency with the rising tide of big data: the need to bring balance between processing power and data access. The Gen-Z Consortium has set out to create an open, high-performance semantic fabric interconnect and protocol that scales from the node to the rack.

Gen-Z brings together 19 companies (ARM, Cray, Dell EMC, HPE, IBM, Mellanox, Micron, Seagate, Xilinx and others) and bills itself as a transparent, non-proprietary standards body that will develop a “flexible, high-performance memory semantic fabric (providing) a peer-to-peer interconnect that easily accesses large volumes of data while lowering costs and avoiding today’s bottlenecks.” The not-for-profit organization said it will operate like other open source entities and will make the Gen-Z standard free of charge.

Vowing to enable Gen-Z systems in 2018, the consortium aims to address what it says are obsolete “programmatic and architectural assumptions”: that storage is slow, persistent, and reliable while data in memory is fast but volatile. The consortium contends these assumptions are no longer optimal in the face of new storage-class memory technologies, which converge storage and memory attributes. Its objective is a new approach to data access that takes on the challenges of explosive data growth, real-time application requirements, the emergence of low-latency storage-class memory, and demand for rack-scale resource pools.

Kurtis Bowman, director, server solutions, office of the CTO, at consortium member Dell EMC, said that 12 of the member companies have worked for the past year to develop what he called a “.7- or .8-level spec” on the fabric, “so there’s still opportunity for new members to contribute to the spec, make it stronger,” but enough work has been done “with the spec in proving out that the technology itself is right.”

“We get asked a lot, ‘Why the new bus?’” he said. “It’s because there’s really nothing that today solves all the problems that we think exist. One is that memory is flat or shrinking in the servers that we have today. So the bandwidth per core is shrinking to a point where today we have less bandwidth per core than we did in 2003. The memory capacity per core is shrinking, the I/O per core is shrinking. It really comes down to there’s just not enough pins on the processor to be able to get the requisite amount of memory and I/O that you need.”
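Bowman’s point about pins comes down to simple division: peak memory bandwidth per core is the number of memory channels times the bandwidth of each channel, divided by the number of cores sharing them. The minimal Python sketch below assumes 64-bit (8-byte) DDR channels and compares two hypothetical socket configurations chosen purely for illustration; they are not figures cited by Bowman or the consortium.

```python
# Illustrative arithmetic only: both socket configurations below are
# hypothetical examples, not numbers from Bowman or the Gen-Z Consortium.

def channel_bandwidth_gbs(transfer_rate_mts: float, bus_width_bytes: int = 8) -> float:
    """Peak bandwidth of one DRAM channel in GB/s (MT/s x bytes per transfer)."""
    return transfer_rate_mts * bus_width_bytes / 1000.0


def bandwidth_per_core_gbs(cores: int, channels: int, transfer_rate_mts: float) -> float:
    """Peak socket memory bandwidth divided evenly across all cores."""
    return channels * channel_bandwidth_gbs(transfer_rate_mts) / cores


# Hypothetical 2003-era socket: 1 core, 2 channels of DDR-333.
older = bandwidth_per_core_gbs(cores=1, channels=2, transfer_rate_mts=333)
# Hypothetical recent socket: 22 cores, 4 channels of DDR4-2400.
newer = bandwidth_per_core_gbs(cores=22, channels=4, transfer_rate_mts=2400)

print(f"older: {older:.2f} GB/s per core")  # older: 5.33 GB/s per core
print(f"newer: {newer:.2f} GB/s per core")  # newer: 3.49 GB/s per core
```

In this made-up comparison the socket’s total bandwidth rises about 14-fold, but the core count rises 22-fold while the channel count (limited by processor pins) only doubles, so each core’s share falls.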

He emphasized the need to solve this challenge as real-time workloads are increasingly adopted: “You have to be able to quickly analyze the data coming in, get some insights from that data, because as it takes longer to analyze that data, your time to insights pushes out and makes it less valuable. So we want to make it so it’s easier to get compute and data closer together and allow those to be done” in a standardized way, across CPUs, GPUs, FPGAs and other architectures. “All of them need access to the memory that’s available.”

Gen-Z touts the following benefits:

- High bandwidth and low latency via a simplified interface based on memory semantics, scalable to 112GT/s and beyond with DRAM-class latencies.

- Support for advanced workloads by enabling data-centric computing with scalable memory pools and resources for real-time analytics and in-memory applications.

- Software compatibility with no required changes to the operating system while scaling from simple, low-cost connectivity to highly capable, rack-scale interconnect.

Gartner Group’s Chirag Dekate, research director, HPC, servers, emerging technologies, said the consortium’s focus on data movement has important implications for high-growth segments of the advanced scale computing market, such as data analytics and machine learning, that utilize coprocessors and accelerators.

“These technologies are crucial in delivering the much needed computational boost for the underlying applications,” Dekate said. “These architectures are biased towards extreme compute capability. However, this results in I/O bottlenecks across the stack.”

He said coprocessors and accelerators utilize the PCIe bus to synchronize host and device memories, despite a difference of roughly three orders of magnitude between their FLOPS rate and the bandwidth of the underlying PCIe bus. “This essentially translates to dramatic inefficiencies in performance, especially in instances where there isn’t sufficient parallelism to hide the data access latencies,” said Dekate. “This problem is only going to get worse as the computational capabilities of core architectures evolve more rapidly than the supporting memory subsystems, resulting in a fundamental mismatch between data movement within a compute node and the floating point rate of modern processors.”
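Dekate’s “three orders of magnitude” can be checked with back-of-the-envelope arithmetic. The minimal Python sketch below uses the PCIe 3.0 x16 link rate (8 GT/s per lane with 128b/130b encoding) and a hypothetical accelerator peak of 10 TFLOPS; the accelerator figure is an assumption for illustration, not a number from Dekate or the article, and the calculation ignores packet and protocol overhead.

```python
import math

# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding.
PCIE3_TRANSFERS_PER_S = 8e9
PCIE3_ENCODING_EFFICIENCY = 128 / 130


def pcie3_bandwidth_bytes_per_s(lanes: int) -> float:
    """One-direction PCIe 3.0 bandwidth in bytes/s, ignoring packet overhead."""
    return PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING_EFFICIENCY * lanes / 8


# Hypothetical accelerator peak throughput (assumption, not from the article).
accel_flops = 10e12
link_bw = pcie3_bandwidth_bytes_per_s(lanes=16)

# Floating-point operations the device could perform per byte moved over the bus.
flops_per_byte = accel_flops / link_bw

print(f"link: {link_bw / 1e9:.2f} GB/s")                    # link: 15.75 GB/s
print(f"{flops_per_byte:.0f} FLOPs per byte transferred")   # 635 FLOPs per byte transferred
print(f"gap: ~10^{math.log10(flops_per_byte):.1f}")         # gap: ~10^2.8
```

Under these assumptions a kernel would need to perform several hundred floating-point operations for every byte it pulls across the bus just to keep the device busy, which is why workloads without enough parallelism or data reuse stall on the interconnect.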

Initiatives like Gen-Z are crucial for addressing the data movement challenges that emerging compute platforms are facing, he said. “The success of Gen-Z will depend on the consortium’s ability to expand and integrate broader scale of processor vendors to be able to have the broadest impact in customer datacenters.”

Gen-Z said it expects to have the core specification, covering the architecture and protocol, finalized in late 2016. Proof systems developed on FPGAs will follow, with fully Gen-Z enabled systems on track for mid-2018. Other consortium members include AMD, Cavium Inc., Huawei, IDT, Lenovo, Microsemi, Red Hat, SK Hynix and Western Digital.